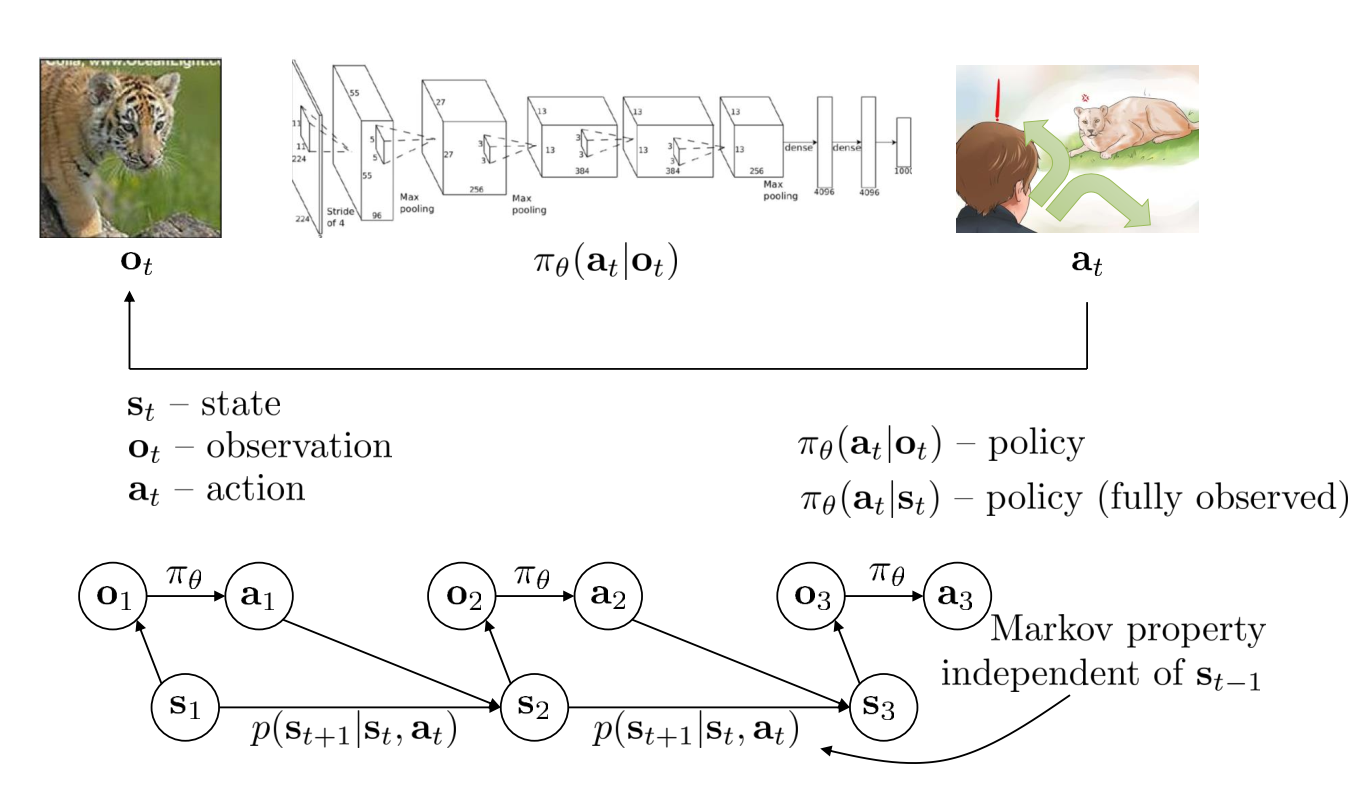

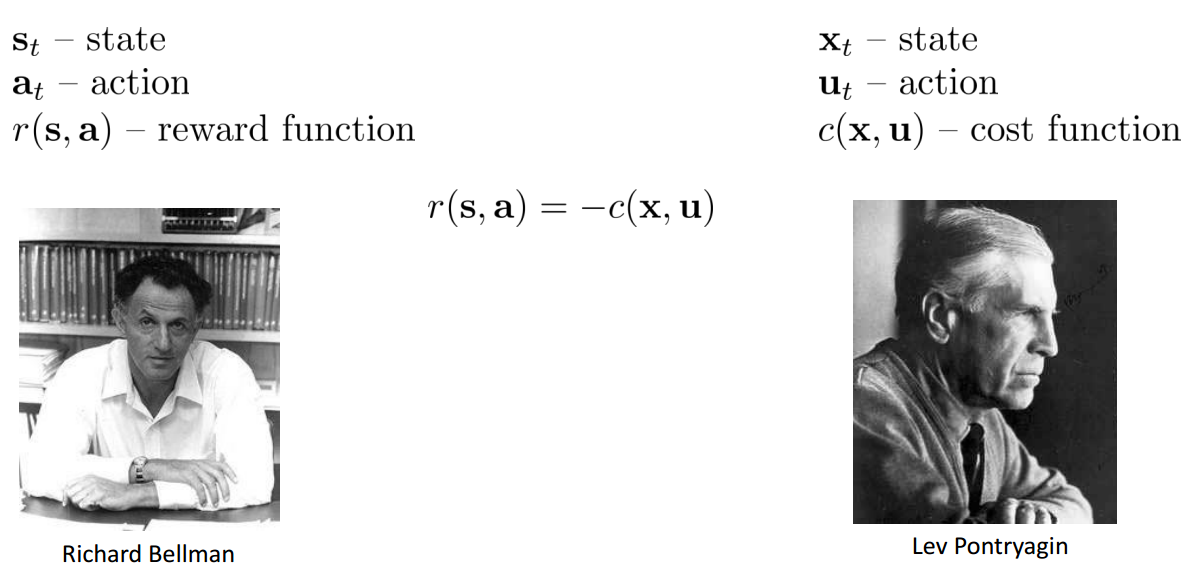

Terminology & Notation

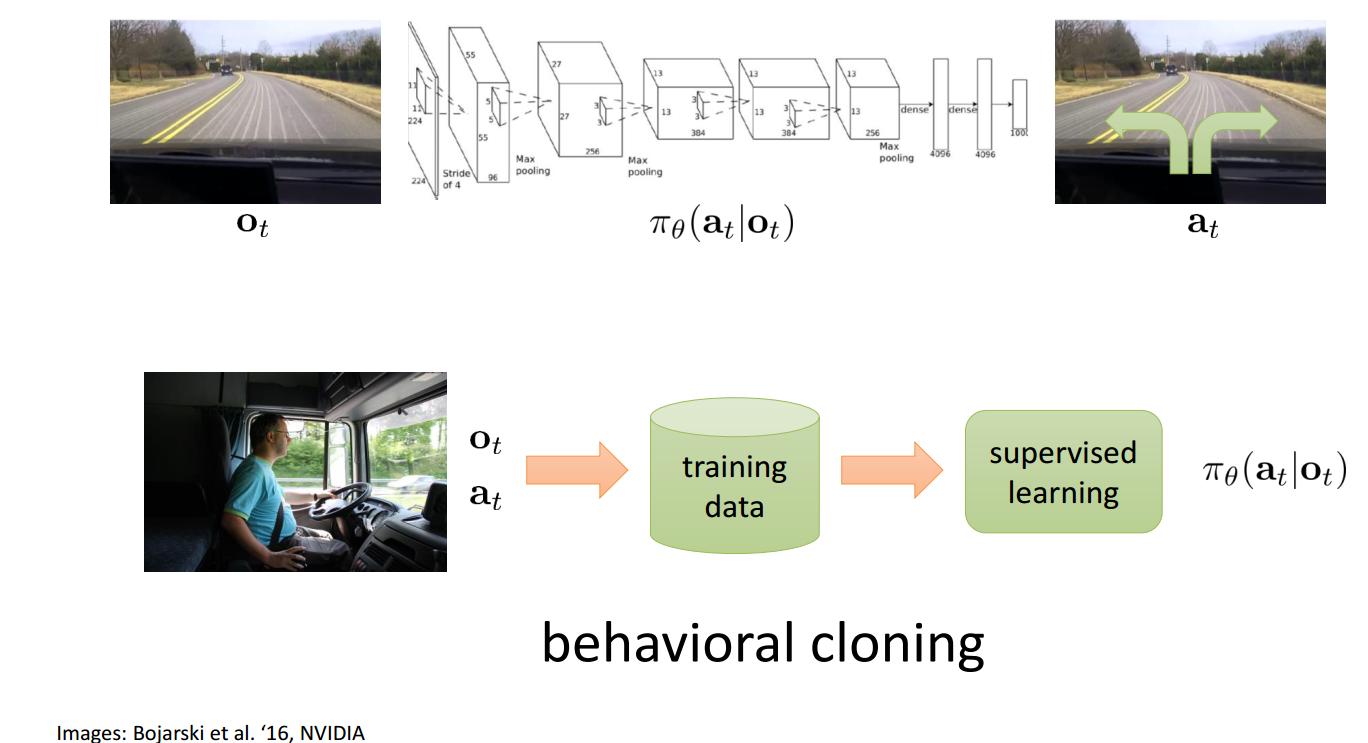

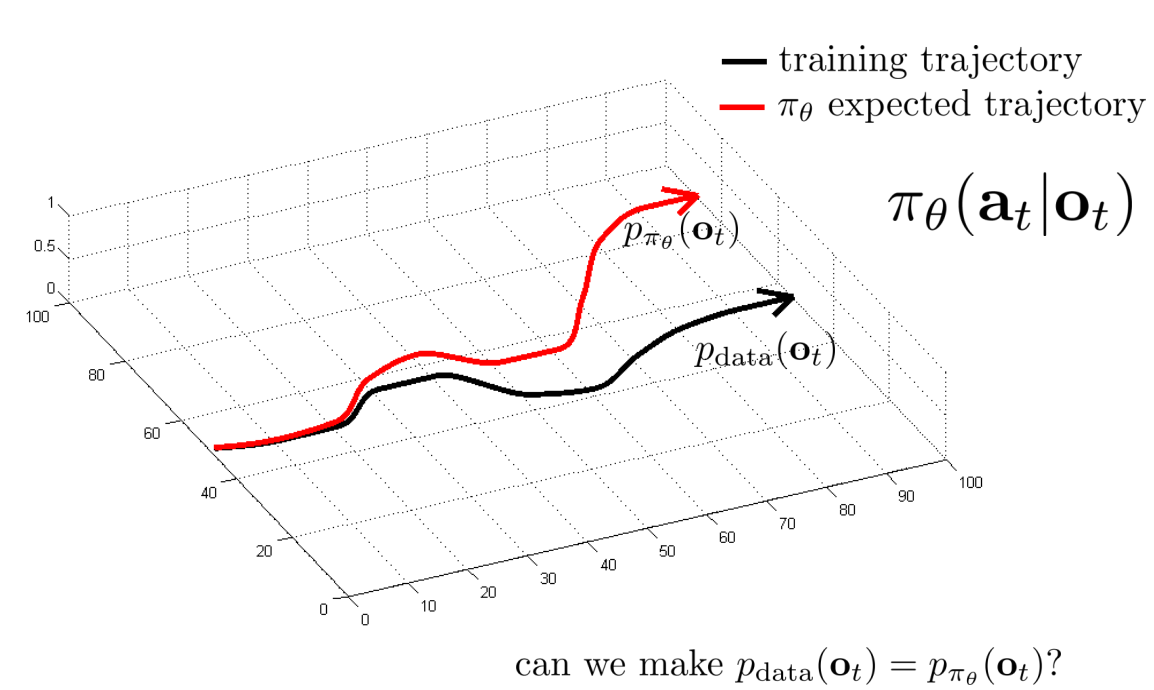

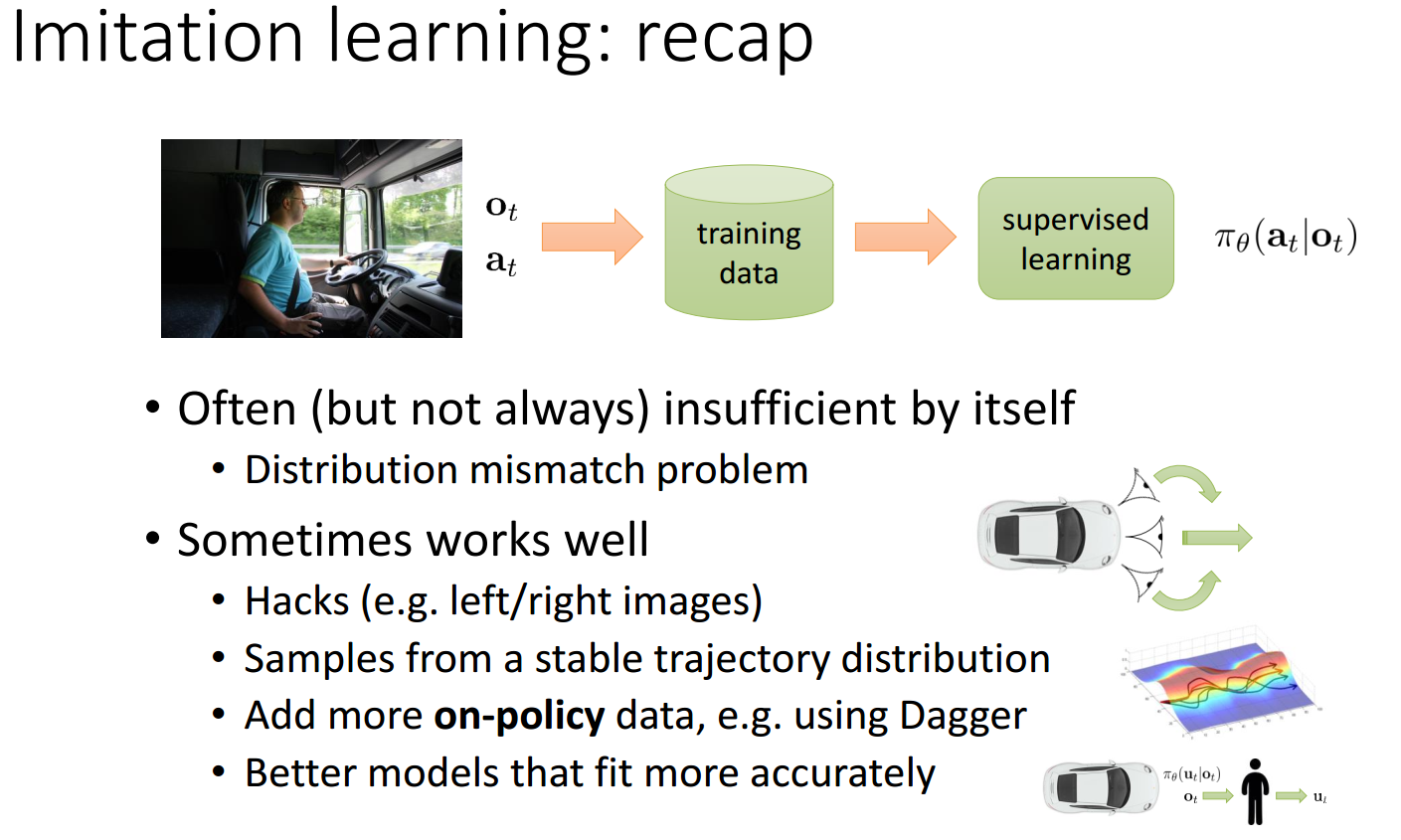

Imitation Learning

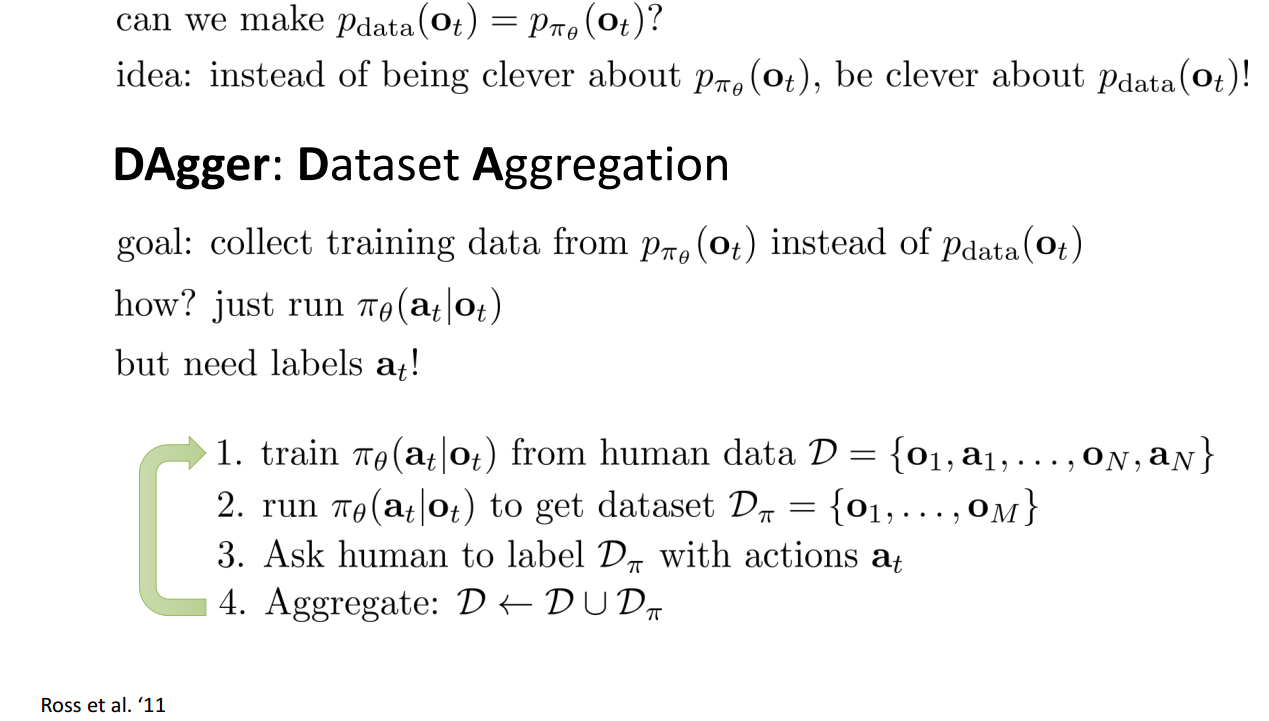

DAgger: Dataset Aggregation

DAgger needs humans to label the data.

DAgger addresses the problem of distributional “drift”.
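The aggregation loop can be sketched on a toy problem. This is a minimal sketch under stated assumptions, not the reference implementation: the 1D dynamics, the linear least-squares policy, and the `expert_action` function (a stand-in for the human labeler) are all hypothetical choices made for illustration.

```python
import numpy as np

def expert_action(states):
    # Hypothetical expert standing in for the human labeler:
    # pushes the state back toward zero.
    return -np.tanh(states)

def fit_policy(states, actions):
    # Supervised step: least-squares fit of a linear policy a = w[0]*s + w[1].
    X = np.stack([states, np.ones_like(states)], axis=1)
    w, *_ = np.linalg.lstsq(X, actions, rcond=None)
    return w

def policy_action(w, s):
    return w[0] * s + w[1]

def rollout(w, rng, horizon=20):
    # Run the *learned* policy and record the states it actually visits.
    # Assumed toy dynamics: s' = s + a + small noise.
    s = rng.normal(0.0, 2.0)
    visited = []
    for _ in range(horizon):
        visited.append(s)
        s = s + policy_action(w, s) + rng.normal(0.0, 0.1)
    return np.array(visited)

def dagger(n_iters=5, n_rollouts=10, seed=0):
    rng = np.random.default_rng(seed)
    # Initial dataset: states from a narrow distribution, labeled by the expert.
    states = rng.normal(0.0, 0.5, size=50)
    actions = expert_action(states)
    w = fit_policy(states, actions)
    for _ in range(n_iters):
        # 1. Collect states by running the current policy.
        new_states = np.concatenate([rollout(w, rng) for _ in range(n_rollouts)])
        # 2. Ask the expert to label those states with actions.
        new_actions = expert_action(new_states)
        # 3. Aggregate: the dataset only grows, nothing is discarded.
        states = np.concatenate([states, new_states])
        actions = np.concatenate([actions, new_actions])
        # 4. Retrain on the aggregated dataset.
        w = fit_policy(states, actions)
    return w, states, actions

w, states, actions = dagger()
```

Because the training states come from the policy's own rollouts, the training distribution moves toward the states the policy actually visits, which is how the loop counters drift. Step 2 is the expensive part in practice, since it is where the human labeling happens.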

What if our model is so good that it doesn’t drift?

- Need to mimic expert behavior very accurately

- But don’t overfit!

Imitation learning: what’s the problem?

- Humans need to provide data, which is typically finite

- Deep learning works best when data is plentiful

- Humans are not good at providing some kinds of actions

- Humans can learn autonomously; can our machines do the same?

  - Unlimited data from own experience

  - Continuous self-improvement

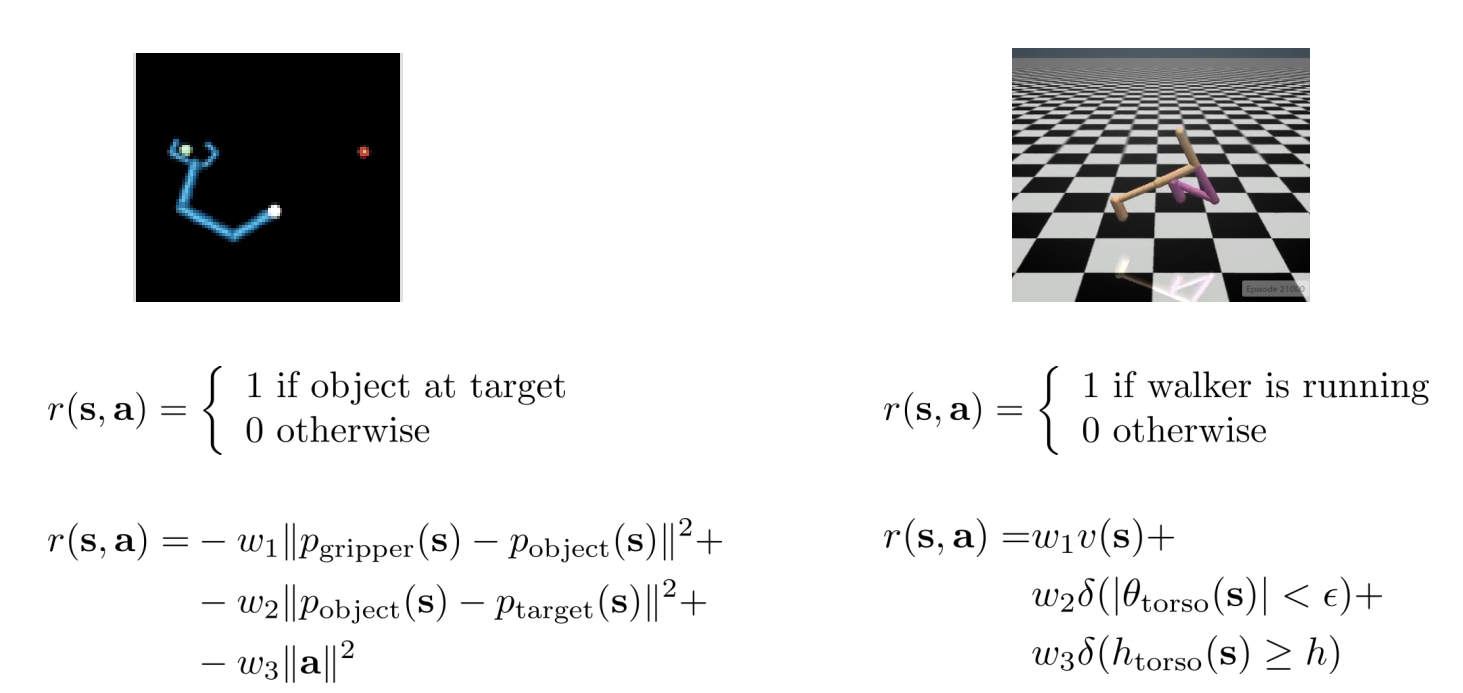

Cost Function

The goal is to:

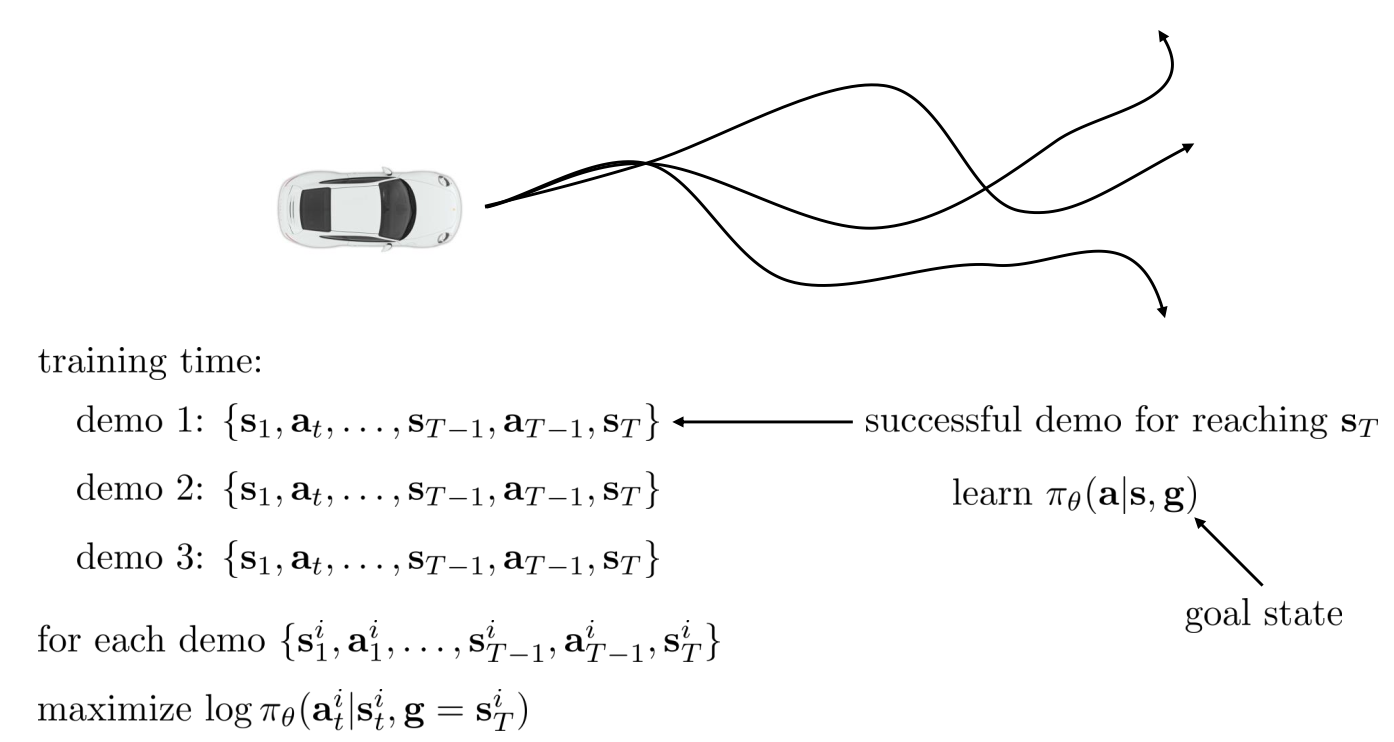

Goal-Conditioned Behavioral Cloning

For more detail, see: Learning Latent Plans from Play
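One common way to build a goal-conditioned training set is to treat the state a demonstration actually reached as its goal, then train the policy on (state, goal) inputs with the demonstrated action as the target. The sketch below assumes that relabeling scheme. The function name `relabel_trajectory` and the array layout are hypothetical, and the sketch is not taken from the referenced paper.

```python
import numpy as np

def relabel_trajectory(states, actions):
    """Build goal-conditioned supervised pairs from one demonstration.

    states:  array of shape (T + 1, state_dim), including the final state
    actions: array of shape (T, action_dim)
    Returns (inputs, targets), where each input row is [s_t, g] with the
    goal g set to the final state the demonstration reached.
    """
    states = np.asarray(states, dtype=float)
    actions = np.asarray(actions, dtype=float)
    goal = states[-1]                          # assumed goal: last state reached
    goals = np.tile(goal, (len(actions), 1))   # same goal for every time step
    inputs = np.concatenate([states[:-1], goals], axis=1)
    return inputs, actions

# Tiny example: a 2D point that moves right, then up.
demo_states = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
demo_actions = [[1.0, 0.0], [0.0, 1.0]]
inputs, targets = relabel_trajectory(demo_states, demo_actions)
```

The resulting `inputs` and `targets` can be fed to any supervised learner, so the only change from ordinary behavioral cloning is the extra goal columns in the input.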

Cost/reward Functions in Theory and Practice

Note: Cover Picture