What is CNN

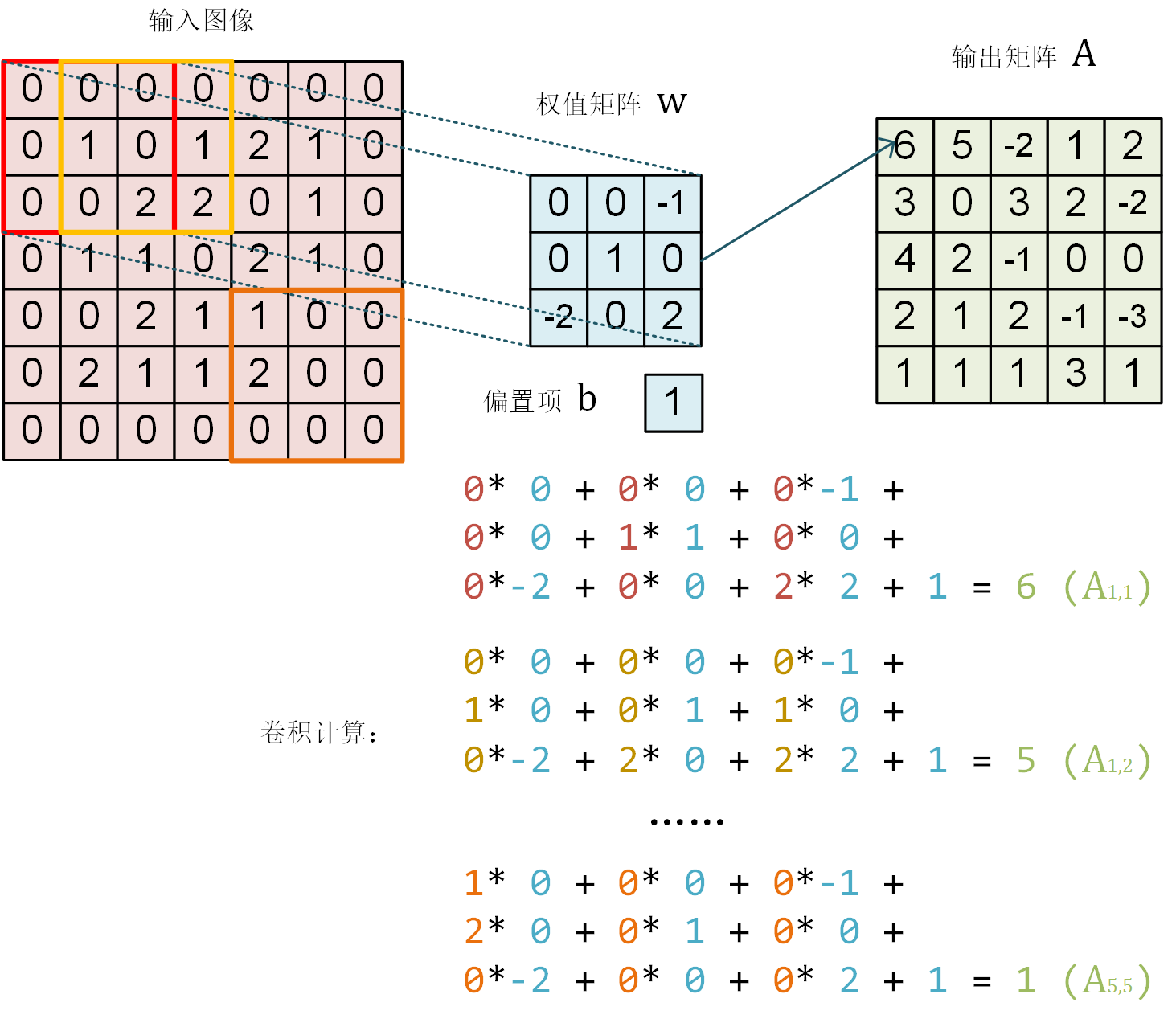

A simple convolution operation:

For other convolution operations, see:

Feature Maps

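The input x to each layer is a set of feature maps of shape [b, h, w, c]: b images of height h and width w, with c channels. Typically, the abstract information spread over (h, w) is condensed into the channel dimension c, for example:

(32, 32, 3) -> (1, 1, 128)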
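As a rough illustration of this condensing, the sketch below stacks five stride-2 convolutions so that a (32, 32, 3) input ends up as (1, 1, 128). The number of layers and the channel widths are arbitrary choices made for this example, not values taken from these notes.

```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential

# Hypothetical stack: each stride-2 'same' convolution halves h and w
# (32 -> 16 -> 8 -> 4 -> 2 -> 1) while the channel count grows to 128.
net = Sequential([
    layers.Conv2D(16, 3, strides=2, padding='same', activation='relu'),
    layers.Conv2D(32, 3, strides=2, padding='same', activation='relu'),
    layers.Conv2D(64, 3, strides=2, padding='same', activation='relu'),
    layers.Conv2D(128, 3, strides=2, padding='same', activation='relu'),
    layers.Conv2D(128, 3, strides=2, padding='same', activation='relu'),
])

x = tf.random.normal([4, 32, 32, 3])  # [b, h, w, c]: 4 RGB images of 32x32
out = net(x)
print(out.shape)  # (4, 1, 1, 128)
```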

CNN

See Code for details.

- Weight sharing

- Sliding window

- Padding & stride
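The three ideas above can be sketched with a naive NumPy loop: one kernel is reused at every position (weight sharing), it moves across the image (sliding window), and `padding` and `stride` control the output size. The helper `conv2d` is a hypothetical name written for this illustration; it handles a single channel and a square kernel only, and is far slower than a real framework implementation.

```python
import numpy as np

def conv2d(image, kernel, stride=1, padding=0):
    """Naive single-channel 2D convolution (cross-correlation, as used in deep learning)."""
    if padding > 0:
        image = np.pad(image, padding, mode='constant')  # zero padding on all sides
    h, w = image.shape
    k = kernel.shape[0]  # square kernel assumed
    out_h = (h - k) // stride + 1
    out_w = (w - k) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Sliding window: the k x k patch whose top-left corner is (i*stride, j*stride).
            window = image[i * stride:i * stride + k, j * stride:j * stride + k]
            # Weight sharing: the same kernel is applied to every window.
            out[i, j] = np.sum(window * kernel)
    return out

image = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.ones((3, 3))
print(conv2d(image, kernel).shape)                       # (3, 3)
print(conv2d(image, kernel, padding=1).shape)            # (5, 5)
print(conv2d(image, kernel, stride=2, padding=1).shape)  # (3, 3)
```

The output size follows (h + 2 * padding - k) // stride + 1, which is why padding of 1 with a 3 x 3 kernel and stride 1 preserves the input size.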

In TensorFlow, the weights and bias of a CNN layer are stored in the following format:

- Weights: [kernel_size, kernel_size, channels_of_last_layer, num_filters]

- Bias: [num_filters]
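These shapes can be checked on a Keras layer. The snippet below is a small sketch; the filter count, kernel size, and input shape are arbitrary values chosen for the example.

```python
import tensorflow as tf
from tensorflow.keras import layers

layer = layers.Conv2D(filters=16, kernel_size=3, strides=1, padding='same')
x = tf.random.normal([2, 32, 32, 3])  # the previous layer has 3 channels
out = layer(x)                         # calling the layer creates its variables

print(layer.kernel.shape)  # (3, 3, 3, 16): [kernel_size, kernel_size, channels_of_last_layer, num_filters]
print(layer.bias.shape)    # (16,): [num_filters]
print(out.shape)           # (2, 32, 32, 16)
```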

Operations

- Pooling/Downsampling

  - Max/Avg pooling

- Upsampling

  - nearest

  - bilinear

- ReLU: filters out negative values (sets them to 0)

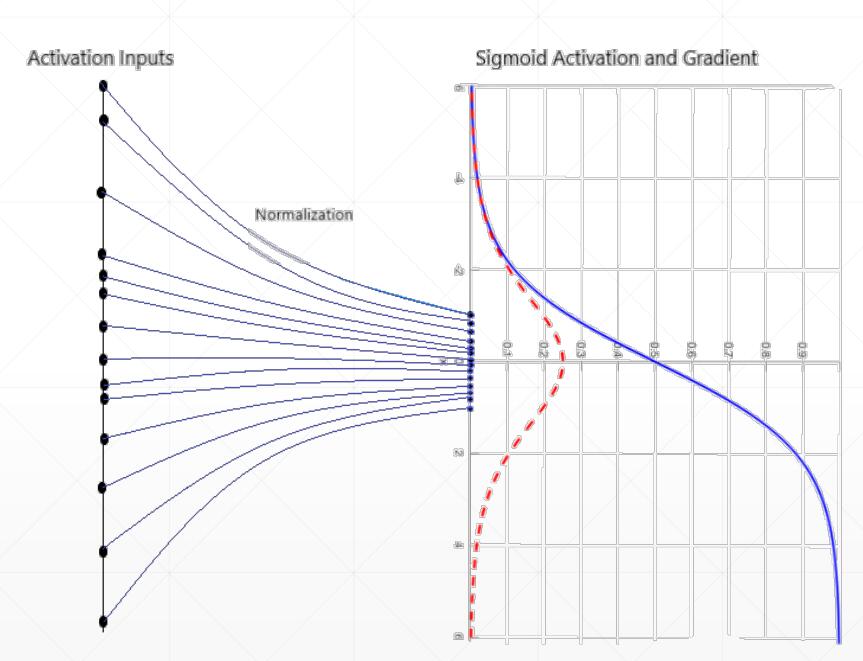

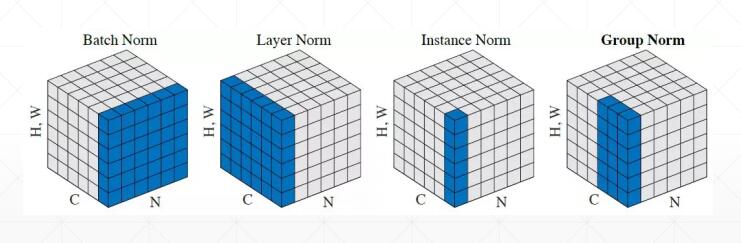

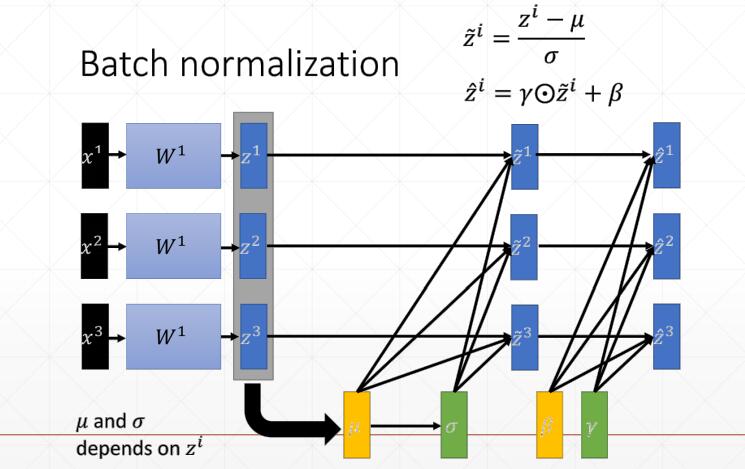

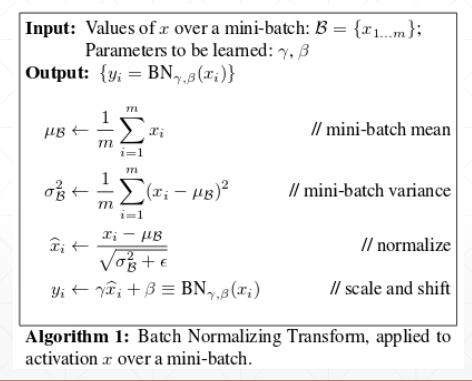
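The operations listed in this section can be reproduced on a small matrix with plain NumPy. This is only a sketch on a hand-picked 4 x 4 input; bilinear upsampling is omitted to keep it short, and in practice the corresponding Keras layers would be used instead.

```python
import numpy as np

x = np.array([[ 1., -2.,  3.,  0.],
              [-1.,  5., -3.,  2.],
              [ 4.,  0., -6.,  1.],
              [-2.,  2.,  7., -8.]])

# 2x2 pooling with stride 2: split the matrix into 2x2 blocks, then reduce each block.
blocks = x.reshape(2, 2, 2, 2).swapaxes(1, 2)  # [block_row, block_col, 2, 2]
max_pooled = blocks.max(axis=(2, 3))           # [[5, 3], [4, 7]]
avg_pooled = blocks.mean(axis=(2, 3))          # [[0.75, 0.5], [1.0, -1.5]]

# Nearest-neighbor upsampling by a factor of 2: repeat every row and every column.
upsampled = max_pooled.repeat(2, axis=0).repeat(2, axis=1)  # shape (4, 4)

# ReLU: negative values become 0, non-negative values pass through unchanged.
relu = np.maximum(x, 0)

print(max_pooled)
print(avg_pooled)
print(upsampled)
print(relu)
```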

BatchNormalization

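Because activation functions can suffer from vanishing gradients (for example, sigmoid saturates for inputs far from 0), it is best to shift the data distribution into a range close to 0.

Batch normalization adjusts the output distribution of each layer toward zero mean and unit variance, approximately the N(0, 1) normal distribution.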
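For reference, the usual formulation over a mini-batch of m values is sketched below. The symbols $\gamma$, $\beta$, and $\epsilon$ are the standard ones from the batch normalization literature and are not named in these notes.

```latex
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2, \qquad
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + \beta
```

Here $\gamma$ and $\beta$ are a learned scale and shift, and $\epsilon$ is a small constant that avoids division by zero.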

Feature Scaling

- Image Normalization

    def normalize(x, mean, std):
        # x: [b, h, w, c]; mean and std are scalars or per-channel values
        # of shape [c], so they broadcast against x
        x = x - mean
        x = x / std
        return x

- Batch Normalization

  - dynamic mean/std

  - net = layers.BatchNormalization()

    - axis=-1

    - center=True

    - scale=True

    - trainable=True

  - net(x, training=None): as with dropout, the layer behaves differently during training and testing, so the training argument must be specified
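The effect of the `training` argument can be seen on a toy batch. This is a minimal sketch; the input shape and the mean and standard deviation of the random data are arbitrary values chosen for the example.

```python
import tensorflow as tf
from tensorflow.keras import layers

net = layers.BatchNormalization(axis=-1, center=True, scale=True)

# Toy batch [b, h, w, c] whose values are far from N(0, 1).
x = tf.random.normal([8, 4, 4, 3], mean=5.0, stddev=2.0)

# training=True: normalize with this batch's mean/std and update the moving averages.
out_train = net(x, training=True)
train_mean = tf.reduce_mean(out_train, axis=[0, 1, 2]).numpy()
print(train_mean)  # per-channel mean, close to 0

# training=False: normalize with the moving averages accumulated so far.
out_test = net(x, training=False)
test_mean = tf.reduce_mean(out_test, axis=[0, 1, 2]).numpy()
print(test_mean)                # still far from 0 after a single update
print(net.moving_mean.numpy())  # has moved slightly from 0 toward the batch mean
```

After only one training call the moving averages have barely moved from their initial values, so the inference-mode output is not yet normalized; they converge as more batches are seen.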

Advantages of BN

- Converge faster

- Better performance

- Robust

- Stable

- Larger learning rate

Architecture

- LeNet-5: 1998 (the original LeNet dates back to the late 1980s)

- AlexNet: 2012

- VGG: 2014

- GoogLeNet: 2014

- ResNet: 2015

- DenseNet

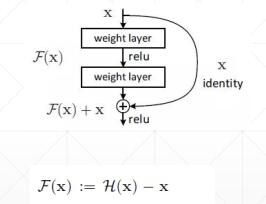

ResNet

The residual module:

- Introduce skip or shortcut connections

- Make it easy for network layers to represent the identity mapping

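- The shortcut should skip at least two layers: with a single layer, the block y = W1·x + x is similar to a plain linear layer, for which the ResNet authors observed no advantage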
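A residual module of this kind might look like the sketch below. The class name `BasicBlock`, the conv-BN-ReLU ordering, and the 1x1 convolution on the shortcut are assumptions following a common ResNet-18-style layout, not code from these notes.

```python
import tensorflow as tf
from tensorflow.keras import layers

class BasicBlock(layers.Layer):
    """Hypothetical residual block: out = relu(F(x) + shortcut(x)), where F is two 3x3 convs."""

    def __init__(self, filter_num, stride=1):
        super().__init__()
        self.conv1 = layers.Conv2D(filter_num, 3, strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.conv2 = layers.Conv2D(filter_num, 3, strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        # With stride != 1 the main path changes h and w, so the shortcut uses a
        # 1x1 convolution to match shapes. With stride == 1 it is the identity,
        # which assumes filter_num equals the number of input channels.
        if stride != 1:
            self.shortcut = layers.Conv2D(filter_num, 1, strides=stride)
        else:
            self.shortcut = None

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = tf.nn.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        identity = inputs if self.shortcut is None else self.shortcut(inputs)
        # The skip connection jumps over both convolutions.
        return tf.nn.relu(out + identity)

x = tf.random.normal([2, 32, 32, 64])
same = BasicBlock(64)(x)
down = BasicBlock(128, stride=2)(x)
print(same.shape)  # (2, 32, 32, 64)
print(down.shape)  # (2, 16, 16, 128)
```

If the two convolutions learn weights near zero, the block output reduces to roughly relu(x), which is how the shortcut makes the identity mapping easy to represent.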

Out of Memory

- Decrease batch size

- Use a shallower network, e.g. tune the per-stage block counts of ResNet [2, 2, 2, 2]

- Buy a new NVIDIA GPU card with more memory

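Using the predefined classic CNN architectures in Keras

tf.keras.applications provides a number of predefined classic convolutional neural network architectures, such as VGG16, VGG19, ResNet, and MobileNet. We can call these architectures directly (and even load pretrained parameters) without defining the network structure by hand.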

model = tf.keras.applications.MobileNetV2()

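Some common parameters are:

- input_shape: the shape of the input tensor (excluding the leading batch dimension); most models default to 224 × 224 × 3. Models generally impose a minimum input size, with height and width of at least 32 × 32 or 75 × 75;

- include_top: whether to include the fully connected layer at the end of the network; defaults to True;

- weights: the pretrained weights; defaults to 'imagenet', which loads weights pretrained on the ImageNet dataset. Set it to None to initialize the variables randomly;

- classes: the number of classes; defaults to 1000. Changing it requires include_top to be True and weights to be None.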
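The parameters above can be combined as in the following sketch. The 96 × 96 input size and the 5-class head are arbitrary values chosen for illustration; `weights=None` is used so that nothing is downloaded.

```python
import tensorflow as tf

# weights=None gives random initialization, which is required for a custom class count.
classifier = tf.keras.applications.MobileNetV2(
    input_shape=(96, 96, 3), include_top=True, weights=None, classes=5)
print(classifier.output_shape)  # (None, 5)

# include_top=False drops the final fully connected layer and returns feature maps.
backbone = tf.keras.applications.MobileNetV2(
    input_shape=(96, 96, 3), include_top=False, weights=None)
print(backbone.output_shape)    # (None, 3, 3, 1280)
```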

    import os

    # Set these before importing TensorFlow so that they take effect.
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide INFO and WARNING logs
    os.environ['CUDA_VISIBLE_DEVICES'] = '2'  # expose only GPU 2; adjust for your machine

    import tensorflow as tf
    import tensorflow_datasets as tfds

    batch_size = 50
    learning_rate = 1e-3

    # tf_flowers has 5 classes; resize to 224x224 and scale pixel values to [0, 1].
    dataset = tfds.load('tf_flowers', split=tfds.Split.TRAIN, as_supervised=True)
    dataset = dataset.map(
        lambda img, label: (tf.image.resize(img, [224, 224]) / 255., label)
    ).shuffle(1024).batch(batch_size)

    # weights=None (random initialization) is required to change the number of classes.
    model = tf.keras.applications.MobileNetV2(weights=None, classes=5)
    optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

    for images, labels in dataset:
        with tf.GradientTape() as tape:
            # training=True so that BatchNormalization layers use batch statistics.
            labels_pred = model(images, training=True)
            loss = tf.keras.losses.sparse_categorical_crossentropy(
                y_true=labels, y_pred=labels_pred)
            loss = tf.reduce_sum(loss)
        print(f'loss: {loss.numpy()}')
        grads = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
